On Wednesday, web infrastructure provider Cloudflare announced a new feature called “AI Labyrinth” that aims to combat unauthorized AI data scraping by serving fake AI-generated content to bots. The tool will attempt to thwart AI companies that crawl websites without permission, collecting training data for the large language models that power AI assistants like ChatGPT.

Cloudflare, founded in 2009, is probably best known as a company that provides infrastructure and security services for websites, particularly protection against distributed denial-of-service (DDoS) attacks and other malicious traffic.

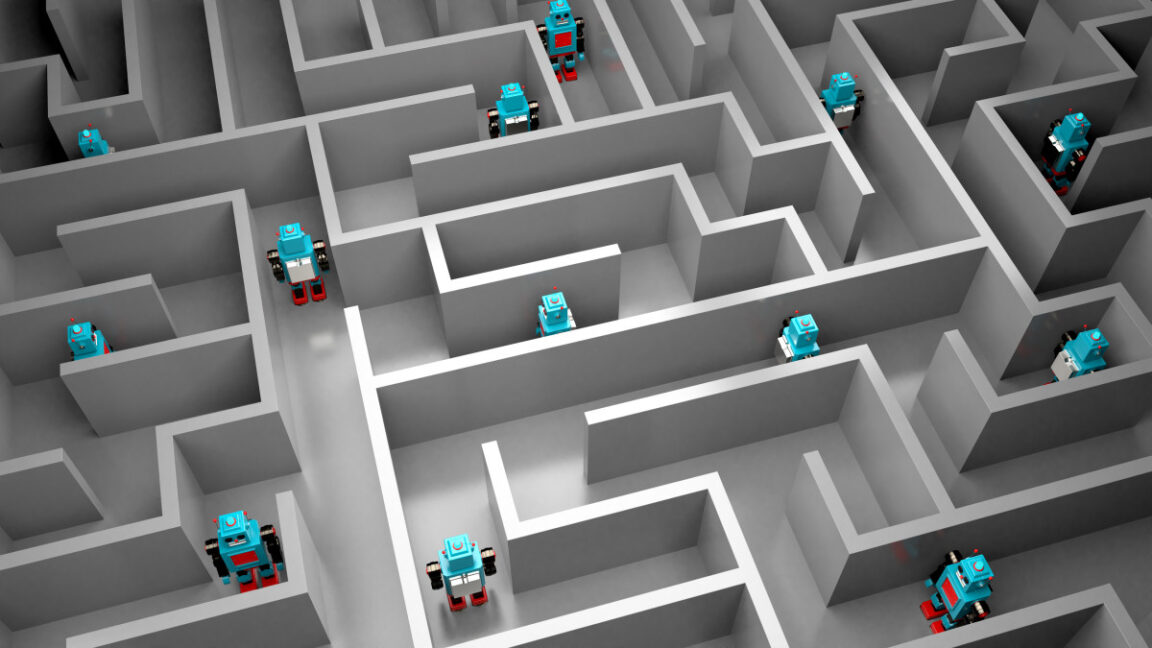

Instead of simply blocking bots, Cloudflare’s new system lures them into a “maze” of realistic-looking but irrelevant pages, wasting the crawler’s computing resources. The approach is a notable shift from the standard block-and-defend strategy used by most website protection services. Cloudflare says blocking bots sometimes backfires because it alerts the crawler’s operators that they’ve been detected.